TeamAware Visual Scene Analysis System

The main objective of the Visual Scene Analysis System (VSAS) is to provide vision-based solutions that help First Responders (FRs) before or during their interventions. Depending on the targeted use cases, these vision-based solutions will be embedded in two different subsystems: the “head-mounted camera” subsystem (called the “helmet” subsystem) and the “UAV-mounted camera” subsystem (called the “UAV payload” subsystem). These subsystems will be linked through dedicated radios to a ground subsystem acting as a gateway.

Targeted emergency scenarios

The vision-based subsystems target the following typical emergency scenarios:

Exploration of a building with a UAV before the FRs' intervention: Before operating in a potentially hazardous building, FRs will use a dedicated small UAV to explore it. They will pilot the UAV with a dedicated Radio Command (RC) that provides video feedback. During the exploration, a piloting-aid system will help the non-expert pilot control the UAV in potentially complex indoor environments, using real-time on-board analysis that prevents the UAV from colliding with obstacles.

FR or UAV localisation during interventions: During an FR intervention or a UAV exploration, knowing their 3D position on a map is a key functionality for the person in charge of monitoring how the situation evolves. This scenario intends to show the localisation of any FR or UAV equipped with the dedicated hardware, based on GNSS information when available and on magneto-visuo-inertial fused data when the FR or the UAV is in a GNSS-denied environment (for example, inside a building).

Semantic mapping: During UAV exploration, it is worth automatically collecting information that may help FRs better understand the situation or plan their future intervention. Semantic mapping can be a real benefit here. This functionality may run on on-board or on-ground hardware. A 2D semantic map gives FRs better situational awareness by displaying semantic information (e.g. the presence of chemical products) on the map. The semantic information to detect remains to be defined.

Victim detection and localisation: During UAV exploration, a nice-to-have functionality is to automatically detect and localise victims within the map built of the building, so that FRs can rescue them as fast as possible. This functionality may run on on-board or on-ground hardware.

Guiding FRs to join other FRs already in the building / Guiding FRs out of a building: First Responder localisation may also be used for other scenarios, such as:

- Using an estimate of the First Responder’s trajectory, guide him/her out of the building when required;

- Guide a rescue team to join a First Responder who is already inside the building.

To guide the FRs, visual indications may be displayed on dedicated AR glasses, or a voice guidance system may be used.
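One simple way to turn a recorded trajectory into guidance, assuming the localisation solution already provides 2D positions in a map frame (x east, y north, counterclockwise angles positive), is to walk the trajectory backwards and derive turn instructions for voice guidance. This is a hypothetical sketch: `exit_waypoints`, `turn_instruction`, the 1 m spacing and the 30° threshold are illustrative choices, not part of the TeamAware design.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in metres; assumed map frame, x east, y north


def exit_waypoints(trajectory: List[Point], min_spacing: float = 1.0) -> List[Point]:
    """Reverse a recorded trajectory, keeping points at least min_spacing apart."""
    if not trajectory:
        return []
    backwards = list(reversed(trajectory))
    waypoints = [backwards[0]]
    for p in backwards[1:]:
        if math.dist(p, waypoints[-1]) >= min_spacing:
            waypoints.append(p)
    if waypoints[-1] != backwards[-1]:
        waypoints.append(backwards[-1])  # always finish at the entry point
    return waypoints


def turn_instruction(prev: Point, here: Point, nxt: Point,
                     threshold_deg: float = 30.0) -> str:
    """Voice instruction from the heading change at 'here' (counterclockwise = left)."""
    h_in = math.atan2(here[1] - prev[1], here[0] - prev[0])
    h_out = math.atan2(nxt[1] - here[1], nxt[0] - here[0])
    # Wrap the heading change into [-pi, pi) before converting to degrees.
    delta = math.degrees((h_out - h_in + math.pi) % (2 * math.pi) - math.pi)
    if delta > threshold_deg:
        return "turn left"
    if delta < -threshold_deg:
        return "turn right"
    return "go straight"
```

For a trajectory entered at (0, 0) that went east and then north, the exit route retraces the same corner and the instruction there is "turn right". A real system would also have to handle loops in the trajectory and map constraints, which this sketch ignores.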

Basic technical functionalities

To address these scenarios, the following technical functionalities are required:

A localisation solution for GNSS-denied environments: The solution will be integrated into the dedicated helmet and UAV, which include an electro-optical system with an IR and/or stereo camera, as well as AR glasses, in order to:

- localise the First Responder, in particular in indoor environments;

- guide the First Responder out of a building.

A UAV piloting assistance solution: To support a non-expert UAV pilot navigating unknown and complex indoor environments, the indoor UAV will be equipped with an electro-optical system comprising an RGB or IR camera and Time-of-Flight ranging sensors (VCSEL-based; VCSEL: Vertical-Cavity Surface-Emitting Laser). Specific algorithms will process the sensor data and prevent the UAV from colliding with obstacles.
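As a hedged illustration of how ToF range data could prevent collisions, the sketch below assumes four hypothetical range readings (front, back, left, right) and scales down the component of the pilot's velocity command that points toward a nearby obstacle. The `Ranges` structure and the `d_stop` and `d_slow` distances are assumptions; the actual piloting-aid algorithms are not described in this document.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Ranges:
    """Hypothetical ToF distances (metres) to the nearest obstacle per direction."""
    front: float
    back: float
    left: float
    right: float


def speed_factor(distance: float, d_stop: float, d_slow: float) -> float:
    """1.0 beyond d_slow, 0.0 at or below d_stop, linear in between."""
    if distance <= d_stop:
        return 0.0
    if distance >= d_slow:
        return 1.0
    return (distance - d_stop) / (d_slow - d_stop)


def limit_command(vx: float, vy: float, r: Ranges,
                  d_stop: float = 0.5, d_slow: float = 1.5) -> Tuple[float, float]:
    """Scale the pilot's body-frame command (vx forward, vy left) so motion
    toward a close obstacle is slowed, then blocked; motion away is unchanged."""
    if vx > 0:
        vx *= speed_factor(r.front, d_stop, d_slow)
    elif vx < 0:
        vx *= speed_factor(r.back, d_stop, d_slow)
    if vy > 0:
        vy *= speed_factor(r.left, d_stop, d_slow)
    elif vy < 0:
        vy *= speed_factor(r.right, d_stop, d_slow)
    return vx, vy
```

Motion away from an obstacle is never restricted, so the pilot can always back off from a wall the UAV has approached.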

A semantic mapping solution: This module is intended to build a 2D map to which semantic information is added, in real time or a posteriori, by analysing images collected by FRs wearing a helmet camera or by the UAV. Mapping will be based on SLAM (Simultaneous Localisation and Mapping) techniques exploiting VCSEL sensor data, and semantic information will be extracted by dedicated neural networks.
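A minimal data structure for a 2D semantic map could simply attach labels to grid cells. This sketch assumes detections have already been projected into map coordinates using the SLAM pose; the `SemanticGrid2D` class, the 0.5 m resolution and the `chemical_product` label are hypothetical, and occupancy mapping itself is omitted.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set, Tuple

Cell = Tuple[int, int]


class SemanticGrid2D:
    """Hypothetical 2D semantic map: each grid cell stores the set of semantic
    labels detected there. Occupancy (walls, free space) from SLAM is omitted."""

    def __init__(self, resolution: float = 0.5):
        self.resolution = resolution  # cell size in metres
        self.labels: DefaultDict[Cell, Set[str]] = defaultdict(set)

    def to_cell(self, x: float, y: float) -> Cell:
        """Map coordinates (metres) to integer cell indices."""
        return (int(x // self.resolution), int(y // self.resolution))

    def add_detection(self, x: float, y: float, label: str) -> None:
        """Attach a label detected at map position (x, y)."""
        self.labels[self.to_cell(x, y)].add(label)

    def find(self, label: str) -> List[Tuple[float, float]]:
        """Centres (metres) of all cells carrying the given label, sorted."""
        r = self.resolution
        return sorted(((i + 0.5) * r, (j + 0.5) * r)
                      for (i, j), labs in self.labels.items() if label in labs)
```

Storing a set per cell lets several detections of the same product collapse into one map entry, while different labels can coexist in the same cell.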

Victim detection and localisation: The approach will be based on the OpenPose algorithm (or a similar algorithm), which accounts both for the variability of the observed shapes (people being articulated bodies) and for partial occlusions. OpenPose is based on a CNN architecture and detects characteristic points of the human body (joints, eyes, mouth, nose, ears, hands, feet) while jointly grouping these points into a graph that forms a skeleton representation.
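OpenPose itself (a CNN that also predicts part affinity fields to associate keypoints) is not reproduced here. Assuming keypoints have already been detected and grouped per person by OpenPose or a similar detector, the sketch below shows how a skeleton graph can be built while tolerating partial occlusions, and how an image bounding box can be derived to localise the person. The keypoint names, the limb list (a simplified COCO-style body model, not OpenPose's exact indexing) and the thresholds are illustrative assumptions.

```python
from typing import Dict, List, Optional, Tuple

Keypoint = Tuple[float, float, float]  # (u, v) in pixels, detection confidence
Person = Dict[str, Optional[Keypoint]]  # None marks an undetected joint

# Simplified COCO-style limb list using names (illustrative, not OpenPose indices).
LIMBS: List[Tuple[str, str]] = [
    ("neck", "nose"), ("nose", "r_eye"), ("nose", "l_eye"),
    ("r_eye", "r_ear"), ("l_eye", "l_ear"),
    ("neck", "r_shoulder"), ("r_shoulder", "r_elbow"), ("r_elbow", "r_wrist"),
    ("neck", "l_shoulder"), ("l_shoulder", "l_elbow"), ("l_elbow", "l_wrist"),
    ("neck", "r_hip"), ("r_hip", "r_knee"), ("r_knee", "r_ankle"),
    ("neck", "l_hip"), ("l_hip", "l_knee"), ("l_knee", "l_ankle"),
]


def _visible(kp: Optional[Keypoint], min_conf: float) -> bool:
    return kp is not None and kp[2] >= min_conf


def skeleton_edges(person: Person, min_conf: float = 0.3) -> List[Tuple[str, str]]:
    """Keep only limbs whose two endpoints are visible, so an occluded
    person still yields a (partial) skeleton graph."""
    return [(a, b) for a, b in LIMBS
            if _visible(person.get(a), min_conf) and _visible(person.get(b), min_conf)]


def person_box(person: Person, min_conf: float = 0.3,
               min_keypoints: int = 4) -> Optional[Tuple[float, float, float, float]]:
    """Image bounding box (u_min, v_min, u_max, v_max) of visible keypoints,
    or None when too few joints are visible to trust the detection."""
    pts = [(kp[0], kp[1]) for kp in person.values() if _visible(kp, min_conf)]
    if len(pts) < min_keypoints:
        return None
    us = [u for u, _ in pts]
    vs = [v for _, v in pts]
    return (min(us), min(vs), max(us), max(vs))
```

Localising the victim in the building map would additionally require projecting this image box using the platform pose and depth information, which is outside the scope of this sketch.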

The localisation solution will be based on a magneto-visuo-inertial approach that combines magnetic, inertial and visual data through a suitable fusion algorithm. It is an odometry solution: it estimates the motion, i.e. the pose (position and orientation), of the subsystem. Such solutions are subject to drift. To cancel this drift, absolute positions must be obtained from time to time from a GNSS sensor, when one is available.
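The drift-cancelling principle can be illustrated with a minimal Kalman filter on 2D position. This sketch assumes the magneto-visuo-inertial front end (not shown) outputs position increments, and models uncertainty with a single isotropic variance; the `DriftCorrectedPosition` class and its noise values are hypothetical. Between GNSS fixes the variance grows with every odometry step, and each fix pulls the estimate back toward the absolute position.

```python
from typing import Optional, Tuple


class DriftCorrectedPosition:
    """Fuse relative odometry increments with occasional absolute GNSS fixes
    using a minimal Kalman filter on 2D position (isotropic variance)."""

    def __init__(self, x: float = 0.0, y: float = 0.0, var: float = 0.0,
                 odo_var_per_step: float = 0.01, gnss_var: float = 4.0):
        self.x, self.y = x, y
        self.var = var              # position variance (m^2)
        self.q = odo_var_per_step   # drift variance added by each odometry step
        self.r = gnss_var           # GNSS measurement variance (m^2), must be > 0

    def predict(self, dx: float, dy: float) -> None:
        """Dead-reckoning step: apply the odometry increment; uncertainty grows."""
        self.x += dx
        self.y += dy
        self.var += self.q

    def update(self, gnss: Optional[Tuple[float, float]]) -> None:
        """Correct with a GNSS fix when available (None in GNSS-denied areas)."""
        if gnss is None:
            return
        k = self.var / (self.var + self.r)  # Kalman gain
        self.x += k * (gnss[0] - self.x)
        self.y += k * (gnss[1] - self.y)
        self.var *= (1.0 - k)
```

For example, with a per-step variance of 1.0 and a GNSS variance of 1.0, three 1 m odometry steps give x = 3.0 with variance 3.0; a GNSS fix at x = 2.0 then moves the estimate to 2.25 and reduces the variance to 0.75.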

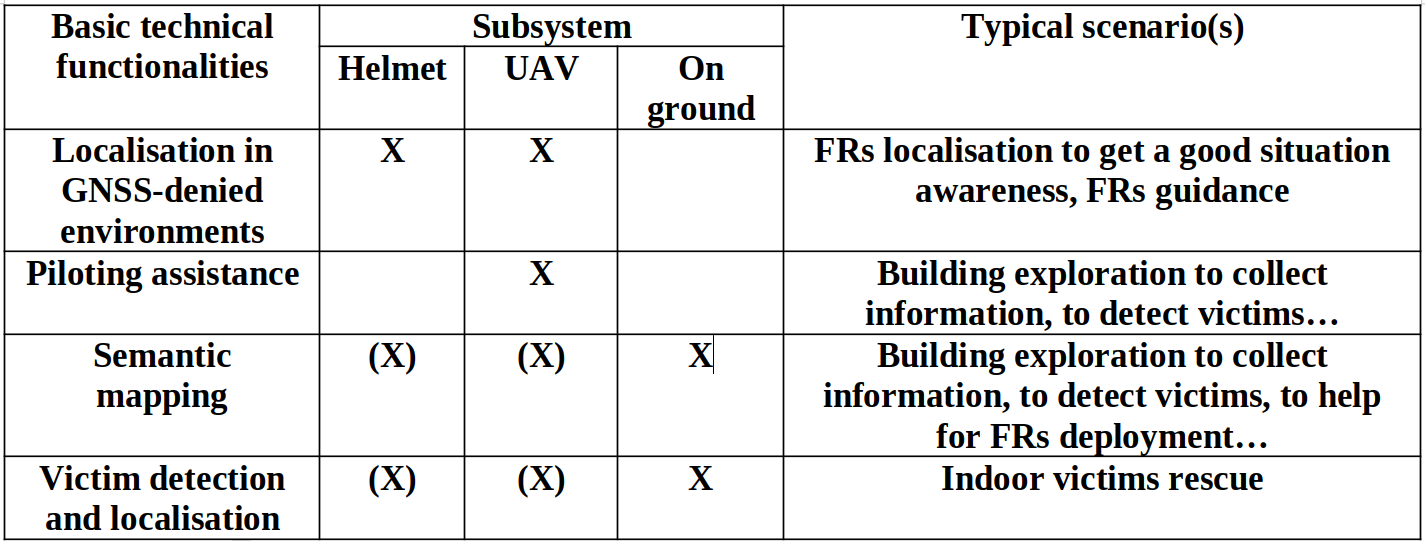

These basic technical functionalities will be embedded in the helmet subsystem or the UAV subsystem and will target several scenarios, as summarised in Table 1.

Table 1: Basic technical functionalities, subsystems and targeted scenarios

Subsystem appearance

Figure 1: Typical indoor UAV platform

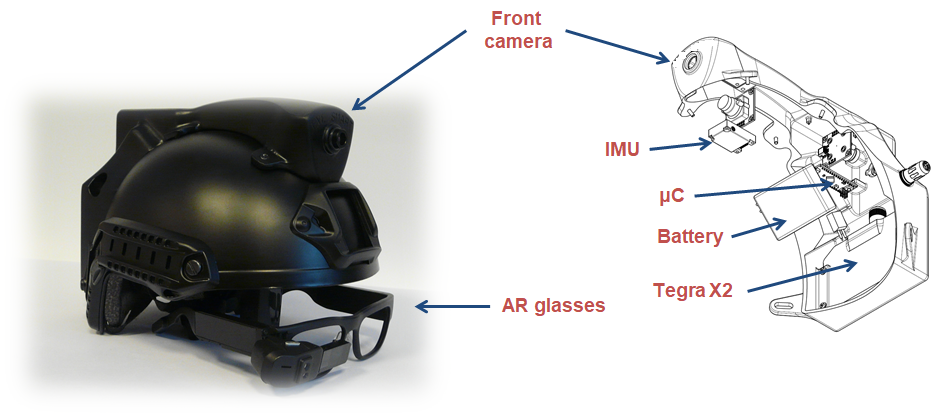

Figure 2: Helmet subsystem (first prototype)

The helmet and UAV subsystems each have their own power supply. Typical autonomy is 20 minutes for the UAV and 4 hours for the helmet. Both the helmet and the UAV are equipped with a 1500 mAh (3S) battery.
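As a rough consistency check, the average power draw implied by these autonomy figures can be estimated. This assumes a nominal 3.7 V per cell for the 3S pack (11.1 V) and that the full rated capacity is usable, which overestimates the real usable energy; the results are estimates, not measurements.

```latex
\begin{aligned}
E &\approx 3 \times 3.7\,\mathrm{V} \times 1.5\,\mathrm{Ah} = 16.65\,\mathrm{Wh} \\
\bar{P}_{\mathrm{UAV}} &\approx \frac{16.65\,\mathrm{Wh}}{(20/60)\,\mathrm{h}} \approx 50\,\mathrm{W},
\qquad \bar{I}_{\mathrm{UAV}} \approx \frac{1.5\,\mathrm{Ah}}{(20/60)\,\mathrm{h}} = 4.5\,\mathrm{A} \\
\bar{P}_{\mathrm{helmet}} &\approx \frac{16.65\,\mathrm{Wh}}{4\,\mathrm{h}} \approx 4.2\,\mathrm{W},
\qquad \bar{I}_{\mathrm{helmet}} \approx \frac{1.5\,\mathrm{Ah}}{4\,\mathrm{h}} = 0.375\,\mathrm{A}
\end{aligned}
```

The roughly twelvefold difference reflects that the same battery must power the UAV's propulsion, whereas on the helmet it only supplies the sensors, processing and radio.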

The UAV subsystem can be used alone. The helmet subsystem can also be used alone, except for the guidance scenario, which requires at least two helmets. Several UAVs or helmets can be used at the same time; the limitation comes from radio bandwidth, in particular for video transmission.

Within the TeamAware project, we plan to use one UAV and two helmets during the demonstration.