TeamAware Body Motion Analysis System

The 2018 report of the International Forum to Advance First Responder Innovation (IFAFRI) identified the following capability gaps:

- Real-time remote monitoring of the state and activities of team members

- Real-time identification and monitoring of risks and perils

- Real-time locations of first responder teams (especially indoors)

- Monitoring of the health status, emergency state, and anomalies of first responder teams

Various technologies and solutions can help close these gaps. Equipping first responder teams with real-time situational awareness, augmented reality, sensor fusion, and network (e.g., 5G) technologies has become a major capability area worldwide. The integrated use of motion sensors and indoor positioning systems allows the activities and locations of first responders to be monitored in real time, which substantially improves their situational awareness.

Motion Sensors

Motion sensors make it possible to monitor the full-body motion of team members in real time. Artificial intelligence algorithms classify the motion so that each person's specific activity can be identified. In addition, anomalies such as exhaustion and blackout (loss of consciousness) can be detected, so that risks and perils become visible through remote monitoring of team activities.
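As a minimal sketch of how an anomaly such as a blackout might be flagged, the Python below applies a simple threshold rule to 3-axis accelerometer magnitudes: a high-impact spike followed by a sustained period in which the sensor measures only gravity (about 1 g). This is a generic fall-and-stillness heuristic, not the TeamAware algorithm; the thresholds, the 10-second stillness window, and the function names are hypothetical assumptions.

```python
import math
from typing import Sequence, Tuple

# Hypothetical thresholds; real values would have to be tuned on field data.
IMPACT_THRESHOLD_G = 2.5      # magnitude (in g) treated as an impact
STILLNESS_TOLERANCE_G = 0.05  # max deviation from 1 g still counted as "motionless"
STILLNESS_SECONDS = 10.0      # how long the wearer must stay motionless afterwards


def magnitude(sample: Tuple[float, float, float]) -> float:
    """Euclidean norm of one 3-axis accelerometer sample, in g."""
    return math.sqrt(sum(a * a for a in sample))


def detect_possible_blackout(samples: Sequence[Tuple[float, float, float]],
                             rate_hz: float) -> bool:
    """Return True if an impact is followed by a sustained period of stillness."""
    needed = int(STILLNESS_SECONDS * rate_hz)  # samples in the stillness window
    mags = [magnitude(s) for s in samples]
    for i, m in enumerate(mags):
        if m < IMPACT_THRESHOLD_G:
            continue
        # Look at the window right after the impact: only gravity (about 1 g) should remain.
        after = mags[i + 1:i + 1 + needed]
        if len(after) == needed and all(abs(x - 1.0) < STILLNESS_TOLERANCE_G for x in after):
            return True
    return False
```

A rule like this only raises a candidate alarm; in practice it would be combined with the activity classification and health data before alerting the team.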

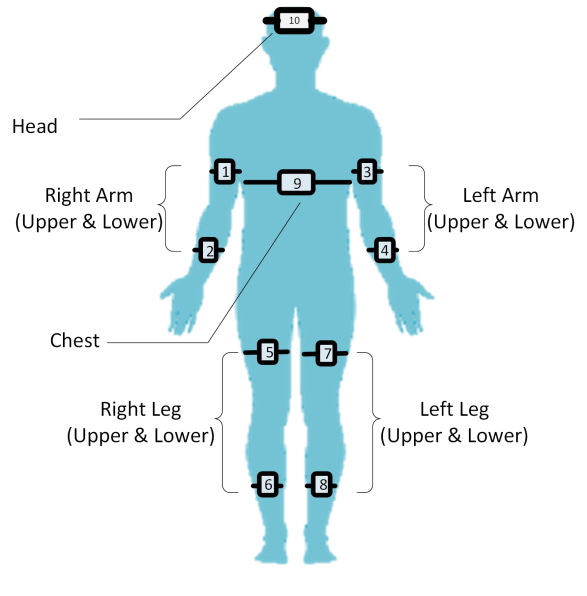

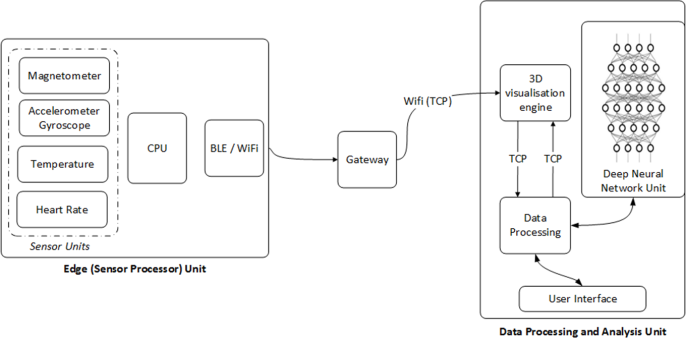

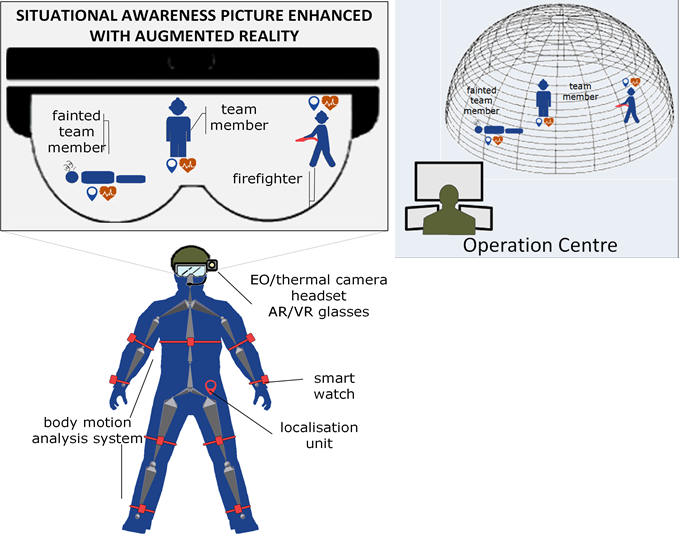

Inertial measurement systems are used to remotely detect and monitor the motion of soldiers on the ground. Inertial measurement sensors placed at 10 different points on the body detect the posture of the body in real time and relay that information to an operation center. These sensors are wireless and operate at low power. Figure 1 shows the main structure of the system.
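To illustrate how a body-worn inertial sensor can reveal posture, the sketch below estimates the tilt of the trunk from a single static accelerometer reading on a torso sensor and maps it to a coarse label. It assumes (hypothetically) that the sensor's +z axis lies along the spine when the wearer stands upright and that the wearer is not accelerating; a full 10-sensor system would instead fuse accelerometer, gyroscope, and magnetometer data for every body segment. The function names and angle thresholds are illustrative only.

```python
import math
from typing import Tuple

Vector = Tuple[float, float, float]

# Assumption: the torso sensor's +z axis points along the spine (towards the head)
# when the wearer stands upright. The actual sensor mounting is not specified.
SPINE_AXIS: Vector = (0.0, 0.0, 1.0)


def tilt_from_vertical_deg(accel: Vector, axis: Vector = SPINE_AXIS) -> float:
    """Angle in degrees between the spine axis and the measured 'up' direction.

    A static accelerometer measures the reaction to gravity, which points up,
    so this angle is the trunk's tilt away from vertical.
    """
    accel_norm = math.sqrt(sum(a * a for a in accel))
    axis_norm = math.sqrt(sum(b * b for b in axis))
    if accel_norm == 0.0 or axis_norm == 0.0:
        raise ValueError("zero-length vector")
    dot = sum(a * b for a, b in zip(accel, axis))
    cos_angle = max(-1.0, min(1.0, dot / (accel_norm * axis_norm)))  # guard rounding
    return math.degrees(math.acos(cos_angle))


def trunk_posture(accel: Vector) -> str:
    """Coarse posture label; the 30 and 60 degree thresholds are illustrative."""
    tilt = tilt_from_vertical_deg(accel)
    if tilt < 30.0:
        return "upright"
    if tilt > 60.0:
        return "lying"
    return "leaning"
```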

The wearable sensors include 3-axis accelerometers, gyroscopes, and magnetometers. The data are collected over Bluetooth Low Energy (BLE) and forwarded to a cloud system through a Wi-Fi gateway, where artificial intelligence algorithms can classify them quickly. This makes it possible to detect a soldier's motions (such as running, walking, climbing stairs, or pointing a gun) remotely. These motions can be monitored instantly through AR glasses and through a VR interface in any operation center.

Figure 1: Situational Awareness Based on Wearable Sensors
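The text does not specify which artificial intelligence algorithms run on the cloud system. As a stand-in, the sketch below shows the general shape of windowed activity classification: acceleration magnitudes are split into sliding windows, each window is summarized by its mean and standard deviation, and a nearest-centroid rule assigns a label. The centroid values, labels, and function names are hypothetical; a deployed system would learn its model from labelled data and use richer features.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

# Hypothetical per-activity centroids: (mean, standard deviation) of the
# acceleration magnitude in g. A real model would be learned from labelled data.
CENTROIDS: Dict[str, Tuple[float, float]] = {
    "standing": (1.00, 0.02),
    "walking": (1.05, 0.20),
    "running": (1.20, 0.60),
}


def window_features(magnitudes: Sequence[float]) -> Tuple[float, float]:
    """Mean and population standard deviation of one window of magnitudes."""
    return statistics.fmean(magnitudes), statistics.pstdev(magnitudes)


def classify_window(magnitudes: Sequence[float]) -> str:
    """Assign the activity whose centroid is closest in feature space."""
    feats = window_features(magnitudes)
    return min(CENTROIDS, key=lambda label: math.dist(feats, CENTROIDS[label]))


def classify_stream(magnitudes: Sequence[float], window: int, step: int) -> List[str]:
    """Slide a fixed-length window over the stream and label each full window."""
    return [classify_window(magnitudes[i:i + window])
            for i in range(0, len(magnitudes) - window + 1, step)]
```

For example, `classify_stream([1.0] * 50 + [0.6, 1.8] * 25, window=50, step=50)` labels the first window as standing and the second as running.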

Situational Awareness Applications

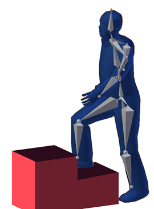

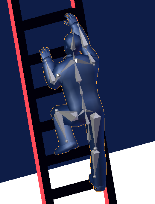

The Body Motion Analysis System (BMAS) can be used for situational awareness applications because it can monitor full-body motion and detect activities. In first responder operations, it can provide team members with situational awareness during search and rescue, where monitoring and knowing the status of each member is critically important. Among other things, BMAS can provide fatigue analysis; posture information indicating whether a first responder is lying, sitting, running, walking, or holding a hose; and alerts for abnormal body postures.
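Abnormal-posture alerts can be layered on top of the posture labels. The sketch below, under hypothetical assumptions (a 15-second limit and a per-member timer that restarts whenever the posture changes), raises an alert when a member remains lying for too long. The class, constant, and parameter names are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Hypothetical rule: staying "lying" longer than this many seconds triggers an alert.
MAX_LYING_SECONDS = 15.0


@dataclass
class PostureMonitor:
    """Tracks how long each team member has held the current posture label."""
    max_lying_s: float = MAX_LYING_SECONDS
    # member id -> (posture label, time in seconds when that posture started)
    state: Dict[str, Tuple[str, float]] = field(default_factory=dict)

    def update(self, member: str, posture: str, t: float) -> Optional[str]:
        """Record the posture observed at time t; return an alert message if warranted."""
        previous = self.state.get(member)
        if previous is None or previous[0] != posture:
            self.state[member] = (posture, t)  # posture changed: restart the timer
            return None
        elapsed = t - previous[1]
        if posture == "lying" and elapsed > self.max_lying_s:
            return f"ALERT: {member} lying for {elapsed:.0f} s"
        return None
```

As written, the alert repeats on every update after the limit is exceeded; a real interface would probably de-duplicate it.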

Within the scope of situational awareness, BMAS is integrated with indoor/outdoor localization systems and with wearables such as smart health monitoring modules, cameras, augmented reality (AR) devices, and virtual reality (VR) devices, as illustrated in Figure 2. This system provides continuous monitoring and tracking of the activity, health, and location of each team member. Wearable cameras are also used to share the scene among team members.
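Because BMAS, the health modules, and the localization system report asynchronously, each member's status has to be merged from several streams. The sketch below keeps the latest value of each field per member, with a timestamp per field so that a delayed, older message cannot overwrite newer data. The field names (`activity`, `heart_rate_bpm`, `position_m`) and the `TeamStatusBoard` class are hypothetical; the actual TeamAware message format is not described here.

```python
from typing import Any, Dict, Tuple

# Hypothetical fields, one per source: BMAS activity, health module, localization.
KNOWN_FIELDS = {"activity", "heart_rate_bpm", "position_m"}


class TeamStatusBoard:
    """Latest value of each field per member, timestamped per field so that
    asynchronous sources cannot overwrite newer data with older readings."""

    def __init__(self) -> None:
        # member id -> field name -> (value, timestamp in seconds)
        self._data: Dict[str, Dict[str, Tuple[Any, float]]] = {}

    def update(self, member: str, name: str, value: Any, t: float) -> None:
        if name not in KNOWN_FIELDS:
            raise ValueError(f"unknown field: {name}")
        fields = self._data.setdefault(member, {})
        current = fields.get(name)
        if current is None or t >= current[1]:  # ignore out-of-order, older readings
            fields[name] = (value, t)

    def snapshot(self, member: str) -> Dict[str, Any]:
        """Current view of one member, e.g. for an AR or VR display."""
        return {name: value for name, (value, _) in self._data.get(member, {}).items()}
```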

Each member wears AR glasses, so they can see the status of the other members while viewing the operation scene at the same time. In other words, each team member is aware of what the other members are doing, so the team can plan the search and rescue operation efficiently. Team members can also respond to urgent situations. For example, a team member can see, through the AR glasses, a teammate lying on the floor or trapped under a load, together with that teammate's location, and the team can then respond and rescue them. While the team members use the AR interfaces, the operation center uses the VR interface to monitor the overall scene.

Figure 2: BMAS – Situational awareness application
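To place a marker for a teammate in the AR glasses, the display needs that teammate's distance and direction relative to the wearer. A minimal 2-D sketch is shown below, assuming both positions are given in metres in a shared local east/north frame (for example, from the indoor localization system) and that the wearer's compass heading is known; differences in floor or height are ignored, and the function name `ar_cue` is hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (east, north) in metres, in a shared local frame (assumption)


def ar_cue(viewer: Point, viewer_heading_deg: float, target: Point) -> Tuple[float, float]:
    """Return (distance in m, bearing relative to the viewer's heading in degrees).

    The relative bearing is normalised to (-180, 180]; positive means the
    target is to the viewer's right, negative to the left.
    """
    de = target[0] - viewer[0]
    dn = target[1] - viewer[1]
    distance = math.hypot(de, dn)
    # Compass-style bearing: 0 deg = north, 90 deg = east.
    bearing = math.degrees(math.atan2(de, dn))
    relative = (bearing - viewer_heading_deg) % 360.0
    if relative > 180.0:
        relative -= 360.0
    return distance, relative
```

For a wearer facing east (heading 90 degrees) and a teammate 10 m due north, the relative bearing is -90 degrees, so the marker would be drawn to the wearer's left.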

Dr. Tolga SÖNMEZ

Dr. Tolga SÖNMEZ graduated from the Electrical Engineering Department of Bilkent University in 1995. He completed his M.S. and Ph.D. studies in electrical engineering at the University of Maryland, College Park. During his Ph.D. studies, he worked on optimal sensor scheduling and tracking algorithms for sonar applications. He then worked at Advanced Acoustic Concepts in the USA, and later at TÜBITAK SAGE in Ankara, developing integrated navigation systems. He recently joined HAVELSAN Inc. as signal processing and modelling manager to work on sensor fusion projects. His interests include sensor fusion, sensor networks, and integrated navigation systems. He has managed several research and development projects in sensor fusion, navigation systems, and acoustic signal processing. He has also participated in several EU project applications and served as an expert in EU project evaluations.

PhD candidate Çağlar AKMAN

PhD candidate Çağlar AKMAN graduated from the Electrical and Electronics Engineering Department of Middle East Technical University in 2008. He received his M.S. degree in electrical and electronics engineering, in the field of robotics, from Middle East Technical University in 2017. During his M.S. studies, he worked on system modelling, sensor arrays, array signal processing, beamforming, multi-object detection, and sensor fusion. He is a PhD candidate in robotics in the Electrical and Electronics Engineering Department at Middle East Technical University. He joined HAVELSAN Inc. as a systems engineer and is a senior engineer in modelling and sensor technologies. He has worked on system architecture design, algorithm development for control systems, sensor networks, and sensor fusion, as well as on embedded system design projects. He has served as technical coordinator in several international R&D projects and will serve as the Technical Coordinator of the TeamAware project.